Andy's Blog

Monday, July 17, 2017

Can I Blend?

The reasons for my dislike of Maya were myriad, but the thing that made me finally switch to Blender was when I lost access to the modelling tools introduced in Maya 2015 after moving company and having to use an older version. In addition to the lack of good modelling tools, I had to get Maya installed on my newly built computer, after first getting my boss to remove it from an unused computer. Not only was it a hassle to get my boss to do this remotely, I couldn't even download Maya 2013 from the Autodesk website to install it, and had to download a torrent, virus check it and then use the legitimate license keys to finally get it installed, instead of the included crack - yes, the pirates provide a better user experience than Autodesk.

I'd tried Blender before, but was so turned off by the default right-click selection that I didn't give it a chance. When I did overcome my initial aversion and learn how to use (and customise) it, I discovered that Blender not only has very nice modelling tools, but also good UV unwrapping tools, usable sculpting and retopo, a very nice unbiased renderer (Cycles), good animation features, Python scripting and (importantly) an active, open development community and enthusiastic user base. Among the many factors that convinced me to keep Blender at the heart of my 3d workflow was knowing that I will never lose access to features and will never be restricted in how many installations I can have, or where I can install it.

Over the two years I have used Blender, I have gradually become more and more interested in its development, and actively follow prominent developers and users via forums, Twitter and YouTube. The Blender community is full of generous people giving their time and experience to improve Blender and share knowledge of how to use it, and although core developers are paid salaries, this all comes from donations provided by users and companies that benefit from Blender in some way. It's amazing to see that despite working on what I doubt is even 1% of the budget of a large company like Autodesk, Blender is still able to keep up with and even best its commercial competitors in many areas. Blender is frequently updated, with new versions being released two to three times a year, in addition to the developmental builds that allow users to test out new features way before a new version is released. In addition, since Blender is open source, some users even make special builds, bringing together experimental or in-development features from different sources that are not always in official builds.

Since I was recently asked by a colleague for some good learning resources for Blender, I'll share them here.

CG Masters - They do some paid courses, but there are a couple of free videos that are great for Blender beginners, such as the Thor's Hammer modelling tutorial.

CG Cookie - Whilst you can find a few free videos, most of the content is paid, but the courses are good and guide you through a topic in depth. The recently released robot character modelling tutorial looks excellent.

Blender Guru - There are a ton of free videos on how to do specific things in Blender, as well as those explaining some more general concepts, such as lighting, materials and even personal development.

Creative Shrimp - Gleb Alexandrov is well known in the Blender community not just for his cool artwork, but also his awesome tutorials. He tends to cover subjects quite concisely, but reveals many techniques and different ways of achieving results, including tricks to speed up rendering and fake things for artistic effect.

Zacharias Reinhardt - Zacharias has made some great videos on sculpting and retopo, as well as a few very helpful videos full of useful Blender tips.

Blender Stack Exchange - Probably the best place to find answers to specific Blender problems you may have, and if nobody else has asked first, you can always ask the question yourself.

Blender Artists - A good place to hang out, find Blender-related news and talk to other Blender fans.

Blender Cloud - A paid service run by the Blender Institute that gives users access to tutorials, textures, HDRI maps, all the open movie assets and more. Some of the content is available for free, but most is subscription only. Subscription also gives you a little bit of storage space for personal projects and some other features, but I just signed up as a way to contribute to the open movies and development of Blender.

A quick tip when you are looking for Blender help via Google search is to narrow your search results down to the last year or two, as very old results, including links to the Blender 2.4 manual, have a tendency to populate the top results if you don't take this extra step. Also, the most reliable way to get to the latest version of the Blender manual is to open it via the Blender Help menu or splash screen, since you will always get the version of the manual for your Blender version.

As an aside, I recently tried to pass the aforementioned Maya license to someone else as I no longer use it, but the number of license activations had been exceeded, so now the legitimately bought $3000 software is useless, instead of merely outdated. Even though the last few years of Maya updates have added a lot of great tools, I'm pretty sure I won't go back to it, as I don't really need it anymore. Although we supplement Blender with more specialised programs, such as ZBrush and Substance, everyone in the studio I work at is now using or learning Blender.

Wednesday, June 29, 2016

More Wacom Issues

After a bit of searching, I found that all the annoying touch rings and graphical feedback annoyances are the result of Windows Ink, a bit of crap software that is part of Windows and is meant to be a generic system for graphics tablets, touch screens and the like. It turns out that this can be disabled in the pen mapping section of the Wacom driver settings. However, to my dismay, disabling Windows Ink in the Wacom driver settings causes touch sensitivity to stop working in Photoshop. This is pretty much the worst trade-off ever - have input lag and annoying feedback rings on every brush stroke, or have no touch sensitivity. Well, it turns out that there is a solution, and I'm going to write it up here more as a note to my future self (reinstalling Windows is something I seem to do once every year or two), but if it helps anyone else with Wacom troubles then that's great.

I found this tip over at the Wacom forums here.

Because Photoshop now uses Windows Ink, we lose touch sensitivity if we disable it in the Wacom pen settings. Fortunately, Adobe have left an option in to enable the old tablet support, which allows us to disable Windows Ink and still have touch sensitivity. Here are the steps to fixing Windows Ink related problems.

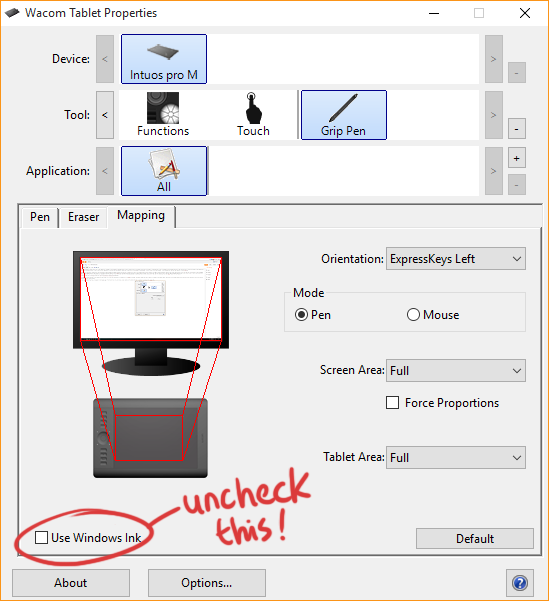

1. Open Wacom Tablet Properties and navigate to the Mapping page of the Grip Pen tool. You will notice a small checkbox in the bottom left that is labelled "Use Windows Ink". Uncheck this.

2. Now open Notepad or any other text editor and enter the following in a new file:

# Use WinTab

UseSystemStylus 0

Save this file as

Users/[username]/AppData/Roaming/Adobe/Adobe Photoshop [version]/Adobe Photoshop [version] Settings/PSUserConfig.txt

3. Open or restart Photoshop and try drawing. Hopefully you will now have no touch rings, modifier key tooltips or other distracting stuff, AND touch sensitivity will still work.

Note that I have only tested this tip on Windows 10 with Photoshop Creative Cloud 2015.5, and the original post this tip is lifted from seemed to be for CC 2014. Hopefully this option will not disappear in a future update. Fingers crossed!
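Since this is something to redo after every reinstall, step 2 could also be scripted. Here is a minimal sketch in Python, assuming you pass in the Photoshop settings folder from your own machine; the `write_ps_user_config` name and its `settings_dir` parameter are made up for this example and are not part of anything Adobe or Wacom provide. It only writes the two lines shown in step 2.

```python
from pathlib import Path

# The two lines from step 2, exactly as they go into the file.
CONFIG_TEXT = "# Use WinTab\nUseSystemStylus 0\n"


def write_ps_user_config(settings_dir):
    """Write PSUserConfig.txt into the given Photoshop settings folder.

    settings_dir is whichever "Adobe Photoshop [version] Settings" folder
    exists on your machine; this sketch does not try to guess it.
    """
    target = Path(settings_dir) / "PSUserConfig.txt"
    target.write_text(CONFIG_TEXT, encoding="ascii")
    return target
```

Call it with the full path to your settings folder and restart Photoshop afterwards, as in step 3.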

Monday, September 22, 2014

A message to Wacom. Your drivers still suck. Please fix them.

I know that almost nobody will read this, let alone someone that works at Wacom and cares about the drivers, but after 6 years of using Wacom products and still having a whole range of issues with their drivers, I need to write this down for my own sanity. This will focus on the driver experience with Windows, since that is where I spend almost all my time using their Intuos products.

1. Make the driver automatically disable Windows Pen + Touch 'enhancements'. This is by far the most crazy thing about Wacom's drivers in my opinion. When you first set up your Wacom, Windows thinks you are trying to use a touch screen, and even if it thinks you are using a tablet, it seems to think you're an office worker using it for writing memos... As a result, Windows enables a bunch of useless helpers that make using the tablet utterly frustrating. There are ripples when you touch the pen on the tablet, there's a hand-writing recognition toolbar that hides at the side of the screen, waiting to pop up as soon as Windows detects you are trying to enter text (never mind that you have a fucking keyboard), and on top of that, there's a menu that appears on long presses, so forget trying to draw a stroke in a graphics application - why would you want to do that anyway? Stop doodling and get back to work!

Fortunately, there are fixes for this that make disabling all this horrible stuff very easy (here), but Wacom should be doing these things when you install the drivers, perhaps giving an option that says "Wait! I bought this tablet because I can't figure out how to use the keyboard and want to use my computer as a horrible memo pad" for the minority that aren't going to be drawing.

2. Make an option that completely disables touch strips, buttons and wheels in a single click. I'll admit that the buttons can be useful to have, but the touch wheel is so easily activated by the side of one's hand brushing against it, that it should be easier to just disable everything. Wacom did recently add some check boxes that go a long way to making this easier, but for those who just want the pen and drawing surface, it would be very helpful to have a setting that nukes all these novelties.

3. Make the driver not randomly forget all the settings. This happens so often, and has been happening ever since I used an Intuos 3, that I can't believe Wacom hasn't fixed it. Sometimes I'll start up and touch will be enabled, and the side buttons will be making horrible menus pop up all over the place if I so much as breathe on them. Then I'll go into the settings and find out that everything has just reset for some reason. Given that there is no way to set up the Wacom exactly how I want it very quickly, this is extremely annoying.

4. The Wacom Desktop Center absolutely does not need to load with Windows. Please disable autostart by default.

5. Why are there two separate settings tools (Desktop Center and Tablet Properties)? Can't you merge them into one amazing app that doesn't forget its settings or automatically load when Windows starts?

6. Make the tail switch default to right and middle mouse. Actually, now that I think about this, double-clicking with a Wacom pen is kind of annoying, since you almost certainly end up moving the cursor a couple of pixels when you lift the pen before the second click, but having no middle mouse and having to use a modifier key or revert to the mouse makes me very sad. Then again, thinking about it further, Photoshop doesn't use the middle mouse button, and Illustrator doesn't even use the right mouse button, so perhaps there is less demand for this than I am estimating. I use my Wacom for everything aside from games (where it usually doesn't work properly) mainly because it doesn't hurt my wrists after using it all day, so I guess I'm probably in the minority here. At least I can change it until the drivers forget all my settings again.

When my Wacom tablet is working properly, it's great, so it would be awesome to see some of these issues resolved after all this time. Finally, how about a plain pen & tablet with no buttons and a larger drawing surface? Please!

Saturday, September 13, 2014

Update to ErgServer (Formerly known as ErgAmigo)

After RowJam I was left rather unsatisfied with the state of the back end code that handled the communication with the erg and websockets connection, since it was single-threaded and connecting to the erg was a pain, and constantly having to reset it was an even bigger pain. Now I have finally managed to get the back end code working more or less the way I wanted it to.

Previously it was only possible for a single client application to connect to the websockets back end because the only way I could figure to get it working was to start the thread that updates the erg in the main server process when the client connects. Now, thanks to (what I suppose must be) correct usage of the Python multiprocessing module's Process and Queue classes, I have managed to get messages from a separate process that monitors the erg to the server process and send the messages to all connected clients. Phew! This means that the erg can stay running regardless of what clients connect or disconnect, and many clients can connect and get data from the erg at once. Whilst I don't know what I'm going to do next, this at least makes me feel a little better about it.
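The general shape of that arrangement can be sketched in a few lines. This is not the ErgServer code: the function names are invented, the erg is replaced by a list of fake readings, and each "client" is just a Python list standing in for a websocket connection. It only shows a separate process feeding a Queue that the server side drains and fans out to every client.

```python
import multiprocessing as mp
import queue


def monitor_erg(out_queue, readings):
    """Stand-in for the erg monitor process: push each reading onto the queue."""
    for reading in readings:
        out_queue.put(reading)
    out_queue.put(None)  # sentinel so the consumer knows to stop


def broadcast(in_queue, clients, timeout=5.0):
    """Server side: forward every queued message to all connected clients."""
    while True:
        try:
            message = in_queue.get(timeout=timeout)
        except queue.Empty:
            break  # nothing arrived in time, give up
        if message is None:
            break  # monitor signalled that it has finished
        for client in clients:
            client.append(message)  # a real server would do a websocket send here


if __name__ == "__main__":
    q = mp.Queue()
    fake_readings = [{"power": 120}, {"power": 135}]
    worker = mp.Process(target=monitor_erg, args=(q, fake_readings))
    worker.start()
    client_a, client_b = [], []
    broadcast(q, [client_a, client_b])
    worker.join()
    print(client_a == client_b == fake_readings)
```

Because the monitor process only ever talks to the queue, it does not care how many clients exist or whether any are connected at all.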

Although this was an unexpected headache to figure out, by modifying the websocket server code very slightly, it worked out to be quite simple in the end. I also cleaned up the code, so now I don't mind linking the GitHub repo for anyone that wants to do something with the code... pretty sure that's nobody, but what the hell?

Tuesday, September 9, 2014

RowJam! VR Rowing

Last weekend my friend Edwon and I made a VR rowing game! Here's the video Edwon put together to announce our strange creation.

A few years ago I stopped going rowing in a real boat because of back problems. The rowing part was actually no problem, but because of all the heavy lifting associated with getting a boat into the river, and all the expected work cleaning the rowing club every few weeks, I decided it was best to stop for a bit. I wanted to get a Concept 2 Rowing Erg at home so that I could still do some rowing exercise, but when I eventually did, it just sat idle for long periods of time because I prefer to go hiking or cycling instead of sitting on a stationary erg. I used to listen to TED talks while rowing on the erg to reduce the boredom of moving back and forth and not going anywhere, but after getting too lazy even for that, I decided I needed to do something to liven up using the erg.

Originally I started off by using a small Python library written by a Concept 2 forum member to connect my Linux laptop to the machine. I used it to record data, but it wasn't doing anything more than the PM3 monitor attached to the erg, so it seemed quite useless. Since I am a game developer, I thought about gamifying the erg, and making some kind of 3d world through which I could row - I especially wanted to do it after seeing the Oculus Rift become reality and experiencing it for myself. However, a combination of laziness and difficulty in setting up my computer, an Oculus (also, remember that the original OR had giant cables and no positional tracking) and the erg prevented me from doing this. Fortunately, my more proactive friend Edwon got sick of hearing me talk about my idea for a virtual reality rowing game and forced a weekend game jam we named RowJam.

Although I had already managed to connect the erg to my Linux laptop, the setup was quite a pain. Mac and Linux include Python by default, but Windows does not: not only does Python need to be installed, a couple of USB access libraries need installing too, and getting it all working together is not always straightforward. However, I recently changed the backend to be a Python script that uses websockets to send the data to any networked computer that supports the websocket protocol - that means just about ANY computer is capable of connecting to the erg as long as it has a modern web browser, such as Chrome or Firefox, and that also means that simple apps can be developed that are OS agnostic.

So over the two-day RowJam, we managed to connect Unity to the erg computer and get data about the amount of power exerted by the user and the simulated speed of the boat. This enabled us to use the erg to make a boat in the game move forward, and by adding some simple graphics we were able to fake rowing on water and through space. The head movement was all handled by the Oculus Rift's head tracking and gyros, and although we didn't make everything correctly to scale and didn't have super amazing graphics in the game, the feeling of being in the boat and looking over the side into the water was really cool.
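For anyone wanting to try something similar, here is a minimal sketch of one way to turn a power reading into boat movement. It assumes Concept2's published relation between power and pace (watts = 2.80 / pace³, with pace in seconds per metre); it is not necessarily what RowJam did, and the function names are hypothetical.

```python
def speed_from_watts(watts):
    """Boat speed in metres per second, using watts = 2.80 / pace**3."""
    if watts <= 0:
        return 0.0  # no power, no movement
    pace = (2.80 / watts) ** (1.0 / 3.0)  # seconds per metre
    return 1.0 / pace


def advance_boat(position, watts, dt):
    """Move the boat forward along its track by one frame of dt seconds."""
    return position + speed_from_watts(watts) * dt
```

A game loop would call `advance_boat` once per frame with the latest power value received from the erg.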

Unfortunately, the actual rowing part needs a lot of work in order to better sync up the movement of the user in the boat and the movement of the boat. In real rowing your movement in the boat does not affect the boat's movement to a great degree (i.e. if you slide up and down the sliders without pulling the oars), but I'd like to get the boat movement to feel a bit more natural nonetheless. I'd also like to work on a new version that tries to push the feeling of being inside a boat moving through an environment a bit more, try to fix problems with the backend so that many users can connect to the erg at once (even using different applications to visualise data) and try to make it feel a little more interactive than just adding power to the boat through exercise, such as using the Oculus head orientation to steer the boat.

I also have some other ideas for games using the erg that don't require an Oculus or powerful computer. One such idea was to use the erg to level up your character in a game through real exercise. After talking to Edwon about this idea, we thought it would be amazing to use smartwatches with heart rate monitors to detect your real-world activity and level up your game-world character's stats. I haven't looked too far into this. I think there are already some apps that try to gamify exercise to some extent (achievements and King of the Mountain awards in Strava, for instance), but I'm not yet aware of any game that uses physical exercise as a way to increase your stats, or even generate a (game-world) currency. Anyway, since the backend for the erg now uses websockets, and the number of things easily achievable in modern web browsers is growing all the time, it should be fun and interesting to try doing more erg based experiments in the future.

Although the source code for the backend is available on GitHub, it's a mess at the moment, so I won't link it :P

I'll make another post as soon as I clean it up and make it work properly. This should just be a case of making the erg thread communicate with the main thread that the clients are connected in, but since I haven't really done any multi-threaded programming where threads need to communicate, it might not be so straightforward... We'll see.

Friday, September 5, 2014

Quake Web Tools

A little while ago I wanted to do some JavaScript programming and decided it would be cool if I could view the various file formats that are used by Quake 1 (a 20-year-old game...) in my web browser. This was partly because I still play around with Quake level editors, and whilst there are multi-platform editors, many of the tools to work with other file types used by Quake don't exist on platforms other than Windows, or are so old they barely work on Windows anymore, so it seemed somewhat worthwhile.

Although the project is far from complete, I have built a drag and drop interface for viewing all of the main binary formats used by Quake (other than .dat, which is used for Quake C code lumps). I've named it Quake Web Tools. It should work perfectly in Chrome, and most of the functionality works in Firefox, although you can't extract files from .pak archives in Firefox at the moment (I plan to fix this!). Other browsers are currently untested, but as I work on the real UI and (hopefully) some editing functionality, I plan to do more compatibility testing. It does, however, work on Mac, Windows and Linux since it's just running in a browser.

Currently you can view the following formats:

- .pak - Uncompressed file archive

- .pal - 256 colour palette files

- .wad - Texture/image directories used for textures and console graphics

- .bsp - Game levels, with textured 3d preview and texture listing

- .mdl - Model files, with textured and animated 3d preview

- .spr - Billboard sprites

- .lmp - Graphics lump, simple images.
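To give an idea of how simple some of these formats are, here is a rough sketch of reading a .pak directory. The tool itself is written in JavaScript; this Python version is only an illustration, with function names invented for the example. It relies on the .pak layout of a 12-byte header (the magic "PACK", then a directory offset and a directory length as little-endian 32-bit integers) followed somewhere in the file by 64-byte directory entries (a 56-byte null-padded name, then a file offset and a file size).

```python
import struct

HEADER = struct.Struct("<4sii")   # magic, directory offset, directory length
ENTRY = struct.Struct("<56sii")   # name, file offset, file size


def read_pak_directory(data):
    """Return a list of (name, offset, size) tuples from the bytes of a .pak file."""
    magic, dir_offset, dir_length = HEADER.unpack_from(data, 0)
    if magic != b"PACK":
        raise ValueError("not a Quake .pak file")
    entries = []
    for pos in range(dir_offset, dir_offset + dir_length, ENTRY.size):
        raw_name, offset, size = ENTRY.unpack_from(data, pos)
        # Names are padded with null bytes up to 56 bytes.
        name = raw_name.split(b"\0", 1)[0].decode("ascii")
        entries.append((name, offset, size))
    return entries


def extract(data, entry):
    """Slice one file's bytes out of the archive."""
    _, offset, size = entry
    return data[offset:offset + size]
```

Since the archive is uncompressed, extracting a file is nothing more than a slice of the original bytes.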

Planned features:

- Clean up of viewer code as it's currently very hacked together

- Proper UI built using Polymer or a home-made Web Components library (to learn about Web Components, not just Polymer's little bubble).

- View files that are inside others without extracting manually.

- View animated textures and sprites

- .wav player (Quake uses .wav for all its sound).

- .map viewer (And possibly editor at a later date?)

- .wad editing (Add, delete, rename etc. Perhaps image editor?)

- .pak editing (Basic add, remove, move file functionality)

- .lit bsp viewer (Probably quite difficult to do in Three.js? Was enough hassle just to show textures).

Wednesday, September 3, 2014

Rendering Scene Normals in Blender

I recently started working on a game where the lighting requires that normal maps exist for all the environment art, but all the art is hand drawn. Although I've gotten used to painting rough normal maps (it's part of the style, so they can't be too perfect), I briefly tried to figure out how to render a camera relative normal map from arbitrary scenes in Blender. Although I was extremely frustrated at a number of points due to lack of Blender know-how and the time it was taking me to track down the causes of various problems I was having on the internet, I finally managed to do it. I thought it was worth sharing in case anyone else has the same problem.

Because I'm no Blender wizard, I am using a very simple scene setup consisting of a sphere (actually a metaball, because it rendered without distortion at the poles), a plane, and an orthographic camera located directly above the objects and pointed down. The render output size (Render > Dimensions) was set to 1024x1024. The aim of this setup was to produce an image of all the possible normal colours, which I could then sample from when drawing normal maps by hand. In actual fact, I had such an image already, but I wondered how it was created and so ended up fiddling around with Blender!

When you have a scene you would like to render, the first thing to do is make sure that the 'Normal' option is checked in RenderLayer > Passes. This will make sure that Blender will output an image of the scene normals, although at this stage the colours will not be correct; rendering the scene now will produce the image shown below. The normal colours shown here are what Blender uses, but most other software will expect something very different for normal maps. To fix the colours, we need to modify the output in the node editor.

Open a node editor panel and make sure that you set it to compositing mode with the 'use nodes' option enabled (see image below). Without the use nodes option, you won't see anything in the node editor, and the compositor mode merely allows us to modify rendered output (known as post-processing). Once that is done, we can start to build a node network that will fix the strange colours of our rendered normals.

TIP: since we don't need to modify the 3d scene right now, run a render so that the output is visible - the output will update as we change the node network in the next section and it's helpful to see it as we work.

First, create two new 'Mix' nodes (Add (shift+a) > Color > Mix). The mix node takes two inputs and mixes them together using a blending mode similar to what you might have used in Photoshop or GIMP to produce an output. This allows us to use mix to modify the colours of our rendered image. Click on the drop down menu (unlabelled, but this is the blending mode) on the first mix node and change it to Multiply, then change the second node to Add. For both nodes, click on either one of the two colour tabs that appear left of the 'image' input labels and change the colour to neutral grey (0.5, 0.5, 0.5) - this means that we will Multiply and Add this colour to each pixel of the image that comes in from the 'RenderLayer' node.

Now the nodes need to be connected together. Connect the leftmost 'RenderLayer' node's 'Normal' output to the 'image' input of the 'Multiply' node, then connect the 'image' output of that to the 'image' input of the 'Add' node. Now connect the 'image' output of the 'Add' node to the 'image' input of the 'Composite' node on the right.

If you check the render output, you will notice that the colours are still wrong. To fix this, we need to add an 'Invert' node (Add > Color > Invert). Add the 'Invert' node between the 'Add' and 'Composite' nodes and the colours of the output should magically be fixed!

NOTE: If you are rendering with Cycles, you don't need the invert node. I don't know why this is, but I recommend using Blender Internal because it seems much faster for rendering normals in this way.
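The arithmetic the node network performs on each pixel can be written out in a few lines of Python. This is a sketch of the maths only, assuming both mix nodes run at full strength; it does not use Blender's API, and the `encode_normal` name is made up for the example.

```python
def encode_normal(normal, invert=False):
    """Map a unit normal with components in [-1, 1] to an RGB colour in [0, 1]."""
    # Multiply node (x 0.5) followed by Add node (+ 0.5).
    colour = tuple(c * 0.5 + 0.5 for c in normal)
    if invert:
        # Invert node: 1 - colour, which is the same as encoding the flipped normal.
        colour = tuple(1.0 - c for c in colour)
    return colour


# A normal pointing straight along +Z, with and without the Invert node.
print(encode_normal((0.0, 0.0, 1.0)))
print(encode_normal((0.0, 0.0, 1.0), invert=True))
```

Written this way, it is clear that the Invert node amounts to flipping the direction of every normal before it is encoded.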

However, there's something still not quite right about the result... the image looks a little bit light perhaps? What's going on? Well, after much searching around on the internet, I found out that Blender applies gamma correction by default to all renders unless it is disabled manually - this even gets applied to our normals, which means we are not getting the real normal colours. This would be a disaster if there were no easy way to disable it. Luckily, there is: go into Scene > Color Management and make sure 'Render' is set to 'RAW'. If the output didn't change instantly, render again and you should notice that the output is significantly darker than before.
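To get a feel for how much damage the gamma step does, here is a rough check in Python. It assumes the default transform behaves like the standard sRGB curve, which is an assumption rather than something verified against Blender itself; the function name is hypothetical.

```python
def linear_to_srgb(c):
    """Standard sRGB transfer function for one channel in [0, 1]."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


# 0.5 is the value a normal component of zero should be stored as.
print(round(linear_to_srgb(0.5), 3))
```

Under that assumption the neutral value 0.5 comes out at roughly 0.735, so every mid-range component of the normal map would be stored noticeably too bright, which matches the washed-out look described above.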

That's it! We are now rendering the normals of the scene relative to the camera and getting the correct colours. If there is anything that is unclear, don't be afraid to ask in the comments, and as I have found, Blender Stack Exchange is an invaluable resource, so check it out.

UPDATE: I just noticed that there is a great function that allows you to display your scene using a special material that gives you the CORRECT normal colours real-time in the viewport. First open the panel that appears on the right of the 3d view by pressing 'n'. In here there are some options under the heading 'Shading'. From within this menu, first enable 'GLSL', then check 'matcap' and click the image that appears. This will open up a menu of different materials that you can use to render your scene in the viewport. Select the material shown below, and you will have a real-time normal render in the viewport! There are also lots of other cool materials to play with.